IoT Streaming Analytics with Kafka, Flink, Druid and Superset

💡 Introduction

In this new blog post (or github repo), we present a case study concerning one of our customer in the domain of Internet of Things (IoT). In this specific use-case, we want to build a dashboard to quickly identify which devices have a behaviour deviating from the measured norm. For instance, it could be connected doors located in a city, with an area having a network disruption causing all doors to reject access to users in this area. With streaming analytics, we can identify those events as they arrive and notify users or support about the disruption of service.

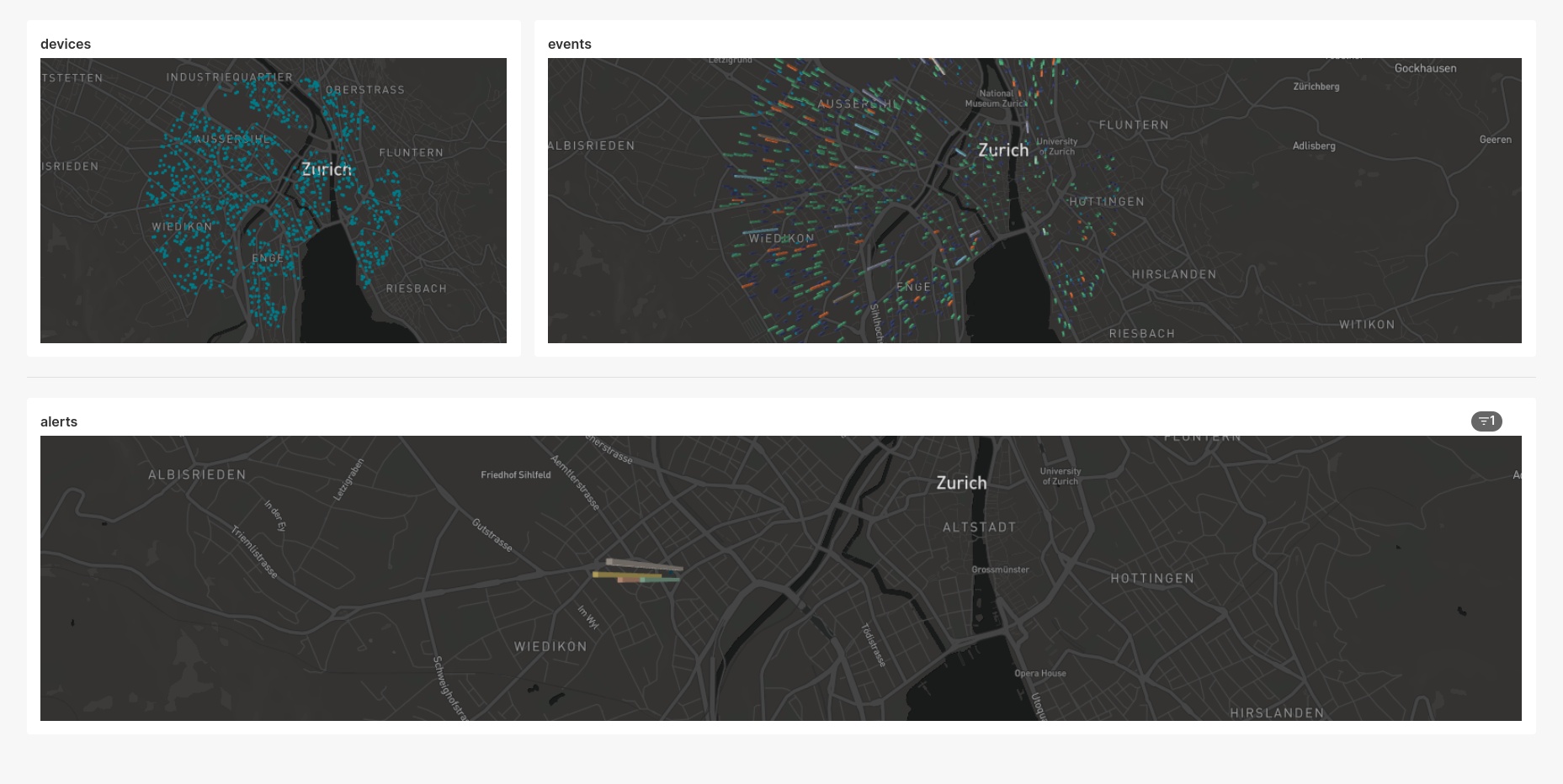

The end-product is a dashboard with live, streaming analytics. It appears as below.

We will be using the following technologies:

The architecture with Apache Kafka acting as central nervous system is described below.

We will be using Apache Kafka as our central data system with which all other services will interact to either consume (read) or produce (write) data. Druid will be our database to ingest and persist our Kafka topics and analytics, and Superset will be our BI tool to produce real-time dashboards querying against Druid. Flink will also join the fun as our stream-processing engine and will be given two jobs:

- Real-time join between 2 Kafka topics, serving as a topic-enrichment job.

- Alerting system, monitoring events, aggregating over time-windows and producing alerts to Kafka

Notice that we are using only Apache technologies so far; that is because we root for the open-source community 🤗.

This solution builds up on all our previous blog posts, that you can find here:

- Kafka 101 Tutorial - Getting Started with Confluent Kafka

- Kafka 101 Tutorial - Streaming Analytics with Apache Flink

- Kafka 101 Tutorial - Real-Time Dashboarding with Druid and Superset

🐳 Requirements

To get this project running, you will just need minimal requirements: having Docker and Docker Compose installed on your computer.

To get this project running, you will need the following:

- Docker

- Docker Compose to orchestrate the infrastructure.

- Miniconda to create a Python virtual environment and run the Python scripts.

- A Mapbox API key to have map data inside Superset. For that, you simply need to create a free account with Mapbox which will give you a free API key with more than enough credits.

The versions I used to build the project are

| |

If your versions are different, it should not be a big problem. Though, some of the following might raise warnings or errors that you should debug on your own.

🐍 Python Virtual Environment

To have all the python dependencies required to run the Kafka producers and other Python scripts inside this project, I would recommend setting up a virtual environment with Miniconda.

To do that, make sure you have Miniconda installed on your computer, which should give you the shell command conda.

From inside the project, type the following commands

| |

With this, you are good to go! Remember to always activate the virtual environment when you run Python scripts from this project.

🏭 Infrastructure

To have everything up and running, you will simply need to run the start.sh script, that will start the whole infrastructure for you.

| |

⚠️ You might have to make the

start.shscript executable before being allowed to execute it. If this happens, simply type the following command

| |

The Druid docker-compose file requires the name of the Docker network to run on. You will be prompted during the run of the start script. If you did not change the name of the default Docker network, then it should be <name-of-project-directoy>_default, so you can simply hit Enter when prompted for the name of the network. Otherwise, you can also type in the name of the Docker network you wish to connect to.

The start script will also prompt you for your Mapbox API key, so have it ready. In case you do not want to make the account with Mapbox, you can just type in a random string or nothing at all. Script execution and everything else will work, you just won’t have map data in the Superset dashboard.

At some point, the start script will prompt you to clean and reset the Druid database, and then to start the Python producers. Before continuing with the script execution, perform the sanity check below.

⚕️ Sanity Check

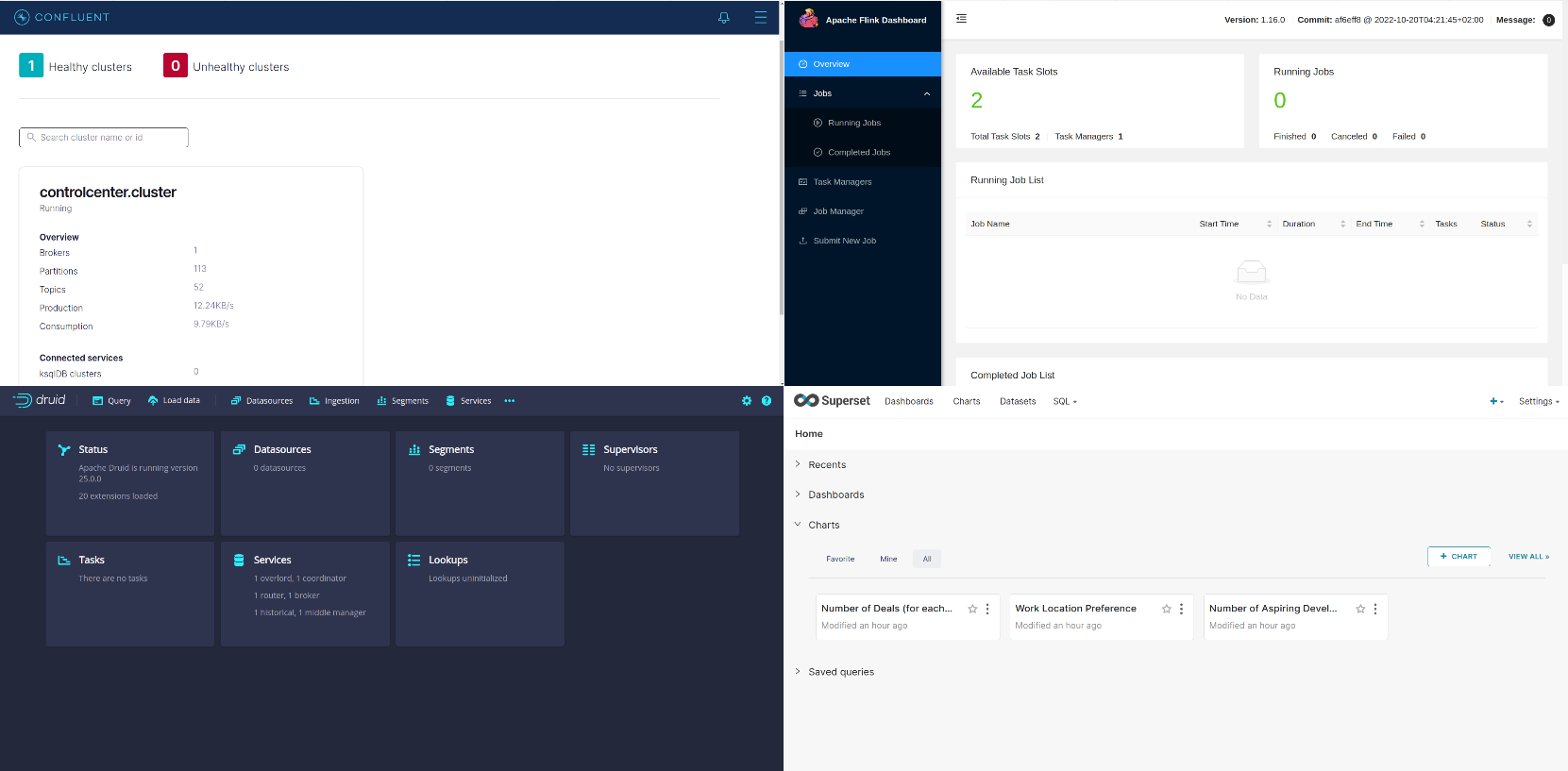

To check that all the services are up and running (you will see that a lot of Docker containers are running by now), visit the following urls and check that all the UIs load properly:

- Kafka: http://localhost:9021

- Flink: http://localhost:18081

- Druid: username is

druid_systemand password ispassword2http://localhost:8888 - Superset: username is

adminand password isadminhttp://localhost:8088

You should see something like

🖼️ Upload Superset Dashboard

In Superset, upload the dashboard located in ./superset_dashboard/IOT-STREAMING-ANALYTICS.zip to Superset.

After you checked that all services are up and running, we can start the synthetic data producers.

⏯️ Start Kafka Producers

At this stage, you should be prompted in the terminal to start the producers that produce data to the Kafka cluster. Open up a new terminal window and cd into the producers directory.

First, we will produce the devices data to the Kafka cluster. Data looks as follows

| |

with the device ID and its location in a nested JSON field. Type the following command to produce 1000 devices data points to Kafka.

| |

You should be able to see the data in the Kafka topic and in the Druid DEVICES table already. Also, the Superset dashboard should already show the devices on a map of Zürich.

Next step is to start producing some IoT events to Kafka, to simulate the devices sending operation notifications. To do so run the following command.

| |

This will produce normal events to the Kafka cluster in a background task. Normal events mean that doors mostly produce some accessGranted notifications. Now, we will introduce a network disruption in a part of the city (Wiedikon), where devices in this area will start producing accessRejected events only. Type the following command.

| |

🐿️ Start the Flink Jobs

Going back to the main terminal window, press Enter once you have run the producers code, and press Enter again when prompted to start the Flink jobs.

At this stage, the dashboard should show multiple charts, with a clearly identifiable area of disruption, where the count of accessRejected events was abnormally high for a period of time.

☠️ Tear the Infrastructure Down

When you are done playing with the project, follow the step below to stop the whole infrastructure.

| |

Again, make sure that the stop.sh script in the iot_streaming_analytics folder is executable.

📌 Conclusion

In this blog post, we demonstrated the solution we implemented for a customer, to perform streaming analytics and produce real-time insights using Kafka, Flink, Druid and Superset.

📝 Like what you read?

Then let’s get in touch! Our engineering team will get back to you as soon as possible.