Kafka 101 Tutorial - Getting Started with Confluent Kafka

In this tutorial, we show how to run a local Kafka cluster using Docker containers, and how to produce and consume events from Kafka using the CLI.

The Infrastructure

The cluster is set up using Confluent’s Docker images. In particular, we set up four services:

- Zookeeper

- Kafka Server (the broker)

- Confluent Schema Registry (for use in a later article)

- Confluent Control Center (the UI to interact with the cluster)

Note that Kafka 3.4 introduces the ability to migrate a Kafka cluster from Zookeeper to KRaft mode. At the time of writing, Confluent has not yet released a Docker image with Kafka 3.4, so we still use Zookeeper in this tutorial. For a discussion about Zookeeper and KRaft, refer to this article.

The services are started and orchestrated with docker-compose. Let us quickly review their configuration.

Zookeeper

As usual, we need to attach Zookeeper to our Kafka cluster. Zookeeper is responsible for storing metadata about the cluster (e.g., where partitions live, which replica is the leader, etc.). This “extra” service, which always needs to be started alongside a Kafka cluster, will soon be deprecated, as metadata management will be fully internalized in the Kafka cluster using the new Kafka Raft metadata mode, KRaft for short.

Confluent’s Zookeeper image provides a few configuration options, documented here.

In particular, we need to tell Zookeeper which port to listen on for client connections; in our case, the client is Apache Kafka. This is configured with the ZOOKEEPER_CLIENT_PORT key. Once this port is chosen, expose the corresponding port in the container. This configuration alone is enough to enable communication between the Kafka cluster and Zookeeper. The corresponding configuration, as used in our docker-compose file, is available below.
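
The rule above says the port set in ZOOKEEPER_CLIENT_PORT must match a port exposed by the container, and it can be checked mechanically. Below is a minimal Python sketch under stated assumptions. It is not the compose file from our repository. The service is modelled as a plain dict, port 2181 (Zookeeper's conventional client port) is an assumed value, and the helper name `client_port_is_exposed` is hypothetical.

```python
"""Sanity-check a docker-compose-style Zookeeper service definition.

Hypothetical sketch: the dict mimics a compose service entry; port 2181
and the helper name are illustrative assumptions, not the repo's values.
"""

# Illustrative service definition, shaped like a docker-compose entry.
zookeeper_service = {
    "image": "confluentinc/cp-zookeeper",
    "ports": ["2181:2181"],  # "HOST:CONTAINER" mapping (assumed values)
    "environment": {
        "ZOOKEEPER_CLIENT_PORT": "2181",  # port Zookeeper listens on for clients
    },
}


def client_port_is_exposed(service: dict) -> bool:
    """Return True if ZOOKEEPER_CLIENT_PORT is among the container-side ports."""
    client_port = str(service["environment"]["ZOOKEEPER_CLIENT_PORT"])
    # Keep only the container side of each "[IP:]HOST:CONTAINER" mapping;
    # a bare "2181" entry uses the same port on both sides.
    container_ports = {mapping.split(":")[-1] for mapping in service.get("ports", [])}
    return client_port in container_ports


print(client_port_is_exposed(zookeeper_service))  # True
```

If you change the client port in the environment block but forget the `ports` entry, the check returns False. That is exactly the mismatch the paragraph warns about.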

Kafka Server

We also need to configure a single Kafka broker with a minimal viable configuration. We specify the port mappings and the networking settings (Zookeeper connection, advertised listeners, etc.). In addition, we set some basic logging and metrics options.

Details about this configuration can be found on the Confluent website, and the full list of configuration options is available here.
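
Advertised listeners are the networking setting most likely to cause confusion. They tell clients which address to use to reach the broker after the initial bootstrap connection. A common pattern with Confluent images declares two listeners: one for clients inside the Docker network and one for clients on the host. The Python sketch below parses such a KAFKA_ADVERTISED_LISTENERS value. The listener names PLAINTEXT and PLAINTEXT_HOST, the hostname `broker` and the ports 29092/9092 are assumptions and are not necessarily what our repository uses.

```python
"""Parse a Kafka advertised-listeners string into {listener_name: (host, port)}.

Hypothetical example value: listener names, hostname and ports are
illustrative assumptions, not taken from the repository.
"""

ADVERTISED = "PLAINTEXT://broker:29092,PLAINTEXT_HOST://localhost:9092"


def parse_listeners(value: str) -> dict[str, tuple[str, int]]:
    """Split 'NAME://host:port,...' into a mapping of listener name to address."""
    listeners = {}
    for entry in value.split(","):
        name, _, address = entry.strip().partition("://")
        host, _, port = address.rpartition(":")
        listeners[name] = (host, int(port))
    return listeners


listeners = parse_listeners(ADVERTISED)
# Under this assumed setup, containers on the compose network would reach
# the broker via broker:29092, while tools on the host would use localhost:9092.
print(listeners["PLAINTEXT"])       # ('broker', 29092)
print(listeners["PLAINTEXT_HOST"])  # ('localhost', 9092)
```

A listener name that is not itself a security protocol (like PLAINTEXT_HOST) also has to be mapped to one through the listener security protocol map.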

Start the Cluster

To set up the cluster, first clone the repository and cd into it locally.

Make sure that the ports mapped to localhost are not already in use, and that you do not have running containers with the same names as those defined in our docker-compose.yaml file (check the container_name configuration key).
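
The port check can be scripted before running docker-compose. This minimal sketch uses only the Python standard library. It tries to bind each port on localhost and reports the ones already taken. The port list is an assumption: 2181, 9092, 8081 and 9021 are the usual defaults for Zookeeper, the broker, Schema Registry and Control Center. Replace them with the host ports actually mapped in docker-compose.yaml.

```python
"""Report which host ports are already in use before starting the cluster.

Assumption: the default ports below may differ from the ones mapped in
the repository's docker-compose.yaml.
"""
import socket

DEFAULT_PORTS = [2181, 9092, 8081, 9021]  # Zookeeper, broker, Schema Registry, Control Center


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if binding host:port fails, i.e. something already holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return True
    return False


if __name__ == "__main__":
    busy = [port for port in DEFAULT_PORTS if port_in_use(port)]
    print("Ports already in use:", busy or "none")
```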

To start the cluster, simply run the command

Depending on the Docker version you have, the command might be

To check that all services are started, type the command

The output should be

You should now be able to access Confluent Control Center, the UI for Kafka cluster management served by the control-center container, at localhost:9021. Please refer to online resources for a guided tour of Confluent Control Center.
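
A container listed as running is not necessarily ready to serve requests, so the UI may not answer immediately after startup. A stdlib-only Python sketch that waits until a TCP port accepts connections can help. The host, port 9021 and timeout values are assumptions for illustration. An open port also does not guarantee that the UI has finished initializing.

```python
"""Wait until a TCP port accepts connections, e.g. Control Center on localhost:9021.

Assumption: host, port and timeouts are illustrative; an open port does
not prove the web UI has finished starting.
"""
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Poll host:port until a connection succeeds or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Usage (assumed port): wait_for_port("localhost", 9021)
```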

Produce and Consume Messages using the CLI

Kafka has the notions of producers and consumers. Simply put, producers are client applications that write data to the cluster, and consumers are applications that read data from it. Consumers ultimately do the processing work on the data they read (e.g., a Flink application would be a consumer).
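
To make the producer/consumer split concrete, here is a toy, in-memory Python model of a single topic partition. It is not Kafka's client API, and the class and method names are hypothetical. Producers append records to the end of an ordered log and get back an offset. Each consumer tracks its own position and reads forward from it. Reading therefore does not remove data for other consumers.

```python
"""Toy in-memory model of one Kafka topic partition (not the real client API)."""
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Record:
    key: Optional[str]
    value: str


@dataclass
class PartitionLog:
    records: list[Record] = field(default_factory=list)

    def append(self, value: str, key: Optional[str] = None) -> int:
        """Producer side: append a record and return its offset."""
        self.records.append(Record(key, value))
        return len(self.records) - 1


@dataclass
class ToyConsumer:
    log: PartitionLog
    position: int = 0  # offset of the next record to read

    def poll(self) -> list[Record]:
        """Consumer side: return records from the current position onwards."""
        batch = self.log.records[self.position:]
        self.position += len(batch)
        return batch


log = PartitionLog()
print(log.append("foo"), log.append("bar"))  # 0 1
first, second = ToyConsumer(log), ToyConsumer(log)
print([r.value for r in first.poll()])   # ['foo', 'bar']
print([r.value for r in second.poll()])  # ['foo', 'bar'] (reading does not delete)
print(first.poll())                      # []
```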

Confluent provides command-line tools to produce and consume messages. In this section, we will:

- Create a topic

- Write (produce) to the topic

- Read (consume) from the topic

To access the CLI tools, we need to enter the broker container.

Create a Topic

For this example, we will create a simple topic in the cluster with the default configuration. We will create a topic named my-amazing-topic with a replication factor of 1 and a single partition. This means that messages will not be replicated (each message is stored on only one server) and will not be partitioned (a single partition is the same as no partitioning). In other words, the topic is backed by a single log.
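
Why does a single partition amount to no partitioning? A producer chooses a partition for each keyed record, typically by hashing the key modulo the number of partitions. The Python sketch below uses CRC32 as a stand-in hash. Kafka's default partitioner actually uses murmur2 on the serialized key and handles keyless records differently, so the partition numbers will not match Kafka's. The conclusion still holds: with one partition, every record lands in partition 0, in a single ordered log.

```python
"""Illustrate key-based partition assignment.

Assumption: CRC32 is a stand-in; Kafka's default partitioner uses murmur2,
so real partition numbers will differ.
"""
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a key to a partition index in [0, num_partitions); same key, same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


keys = ["user-1", "user-2", "user-3", "user-4"]
print({k: choose_partition(k, 3) for k in keys})  # each key maps to one of 0, 1, 2
print({k: choose_partition(k, 1) for k in keys})  # every key maps to partition 0
```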

To create this topic, run the following command from within the broker container:

If the command succeeds, it will output

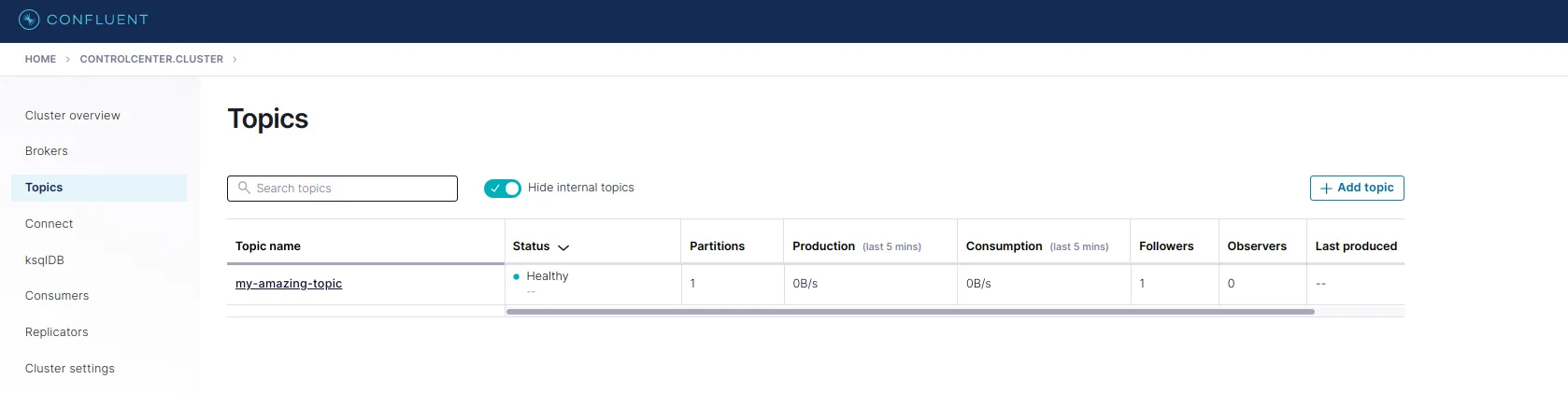

One can also check that the topic was successfully created by navigating to the Topics tab of the web UI, where the newly created topic should be listed with the Healthy status.

Produce to the Topic

Now that we have created a topic with the default configuration, we can start producing records to it! Still from within the container, run the following command and type your messages.

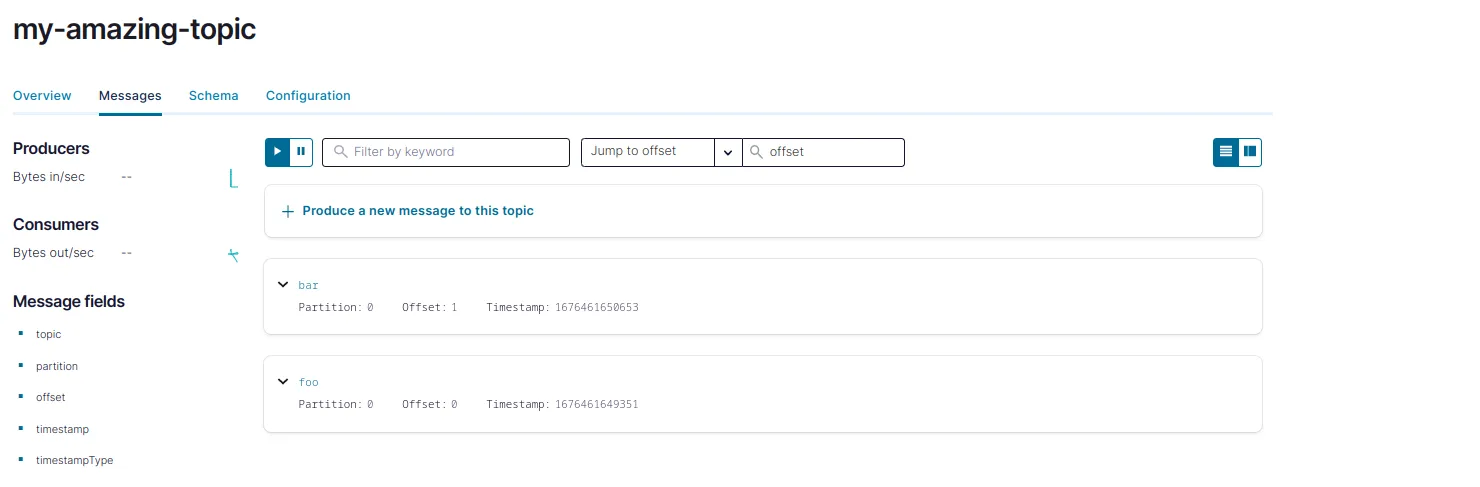

This command produces two messages to the topic my-amazing-topic, without a key and with the string values foo and bar.

By navigating to the Topics tab, one can see that the messages were produced and are persisted in the topic.

If you click on the Schema tab, you will notice that no schema is present. This means that the topic can contain records with different schemas, such as plain strings or JSON strings. No schema is enforced, which is not good practice in production; hence the need for the schema-registry container. Do not worry about it for now: we will address this point in our next blog post, where we will build a small producer application that pushes Avro records to Kafka with schema validation.

Consume from the Topic

The final step is to consume the messages we just produced from the topic. To do that, run the following command from within the container:
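
One detail matters here: where the consumer starts reading. A console consumer without committed offsets starts, by default, at the end of the log, so it only shows records produced after it starts. Passing --from-beginning makes it start at the earliest offset instead. The Python sketch below models that choice on in-memory lists. The function name is hypothetical, and the model is a simplification of Kafka's offset-reset behaviour, not its implementation.

```python
"""Model where a new consumer starts reading (simplified earliest vs. latest reset)."""


def records_seen(log: list[str], produced_after_start: list[str], from_beginning: bool) -> list[str]:
    """Return the values a freshly started consumer would read.

    `log` holds records already in the topic when the consumer starts;
    `produced_after_start` holds records produced afterwards.
    """
    start = 0 if from_beginning else len(log)  # earliest vs. latest offset
    full_log = log + produced_after_start
    return full_log[start:]


existing = ["foo", "bar"]
print(records_seen(existing, [], from_beginning=True))        # ['foo', 'bar']
print(records_seen(existing, [], from_beginning=False))       # []
print(records_seen(existing, ["baz"], from_beginning=False))  # ['baz']
```

If the consumer prints nothing for foo and bar, the start position is the first thing to check.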

Kill the Cluster

Once you have played enough with your Kafka cluster, you might want to bring it down. To do this, cd into the project repository again and tear down the infrastructure with docker-compose down.

What is Coming Next?

In our next blog post, we will see how to produce Avro records to Kafka, starting from this vanilla Kafka infrastructure. Stay tuned!
