Kafka 101 Tutorial - Real-Time Dashboarding with Druid and Superset

💡 Introduction

In this new blog post (or GitHub repo), we build on everything we have seen so far in this Kafka 101 Tutorial series, and we will see how to ingest our streaming data into a real-time database: Apache Druid! In addition, we will see how to visualize the data that we produce (either from our raw stream of events or our Flink-aggregated stream) using real-time dashboards supported by Apache Superset. Notice that we are using only Apache technologies so far; that is because we root for the open-source community 🤗.

The two new services we introduce in this article are a bit less known than what we have used so far, so I am just going to introduce them briefly.

🧙‍♂️ Apache Druid

- Druid is a column-oriented data store, which means that it stores data by column rather than by row. This allows for efficient compression and faster query performance.

- Druid is optimized for OLAP (Online Analytical Processing) queries, which means it’s designed to handle complex queries on large data sets with low-latency response times.

- Druid supports both batch and real-time data ingestion, which means that it can handle both historical and streaming data.

- Druid includes a SQL-like query language called Druid SQL, which allows users to write complex queries against their data.

- Druid integrates with a number of other data processing and analysis tools, including Apache Kafka, Apache Spark, and Apache Superset.

♾ Apache Superset

Apache Superset is a modern, open-source business intelligence (BI) platform that allows users to visualize and explore their data in real time.

- Superset was originally developed by Airbnb, and was later open-sourced and donated to the Apache Software Foundation.

- Superset is designed to connect to a wide variety of data sources, including databases, data warehouses, and big data platforms.

- Superset includes a web-based GUI that allows users to create charts, dashboards, and data visualizations using a drag-and-drop interface.

- Superset includes a wide variety of visualization options, including bar charts, line charts, scatterplots, heatmaps, and geographic maps.

- Superset includes a number of built-in features for data exploration and analysis, including SQL editors, data profiling tools, and interactive pivot tables.

🐳 Requirements

To get this project running, you only need Docker and Docker Compose installed on your computer.

The versions I used to build the project are

| |

If your versions are different, it should not be a big problem, though some of the following steps might raise warnings or errors that you will have to debug on your own.

🏭 Infrastructure

To have everything up and running, you will need to have the whole producer part running (the repo from the previous article) and Druid and Superset. To do this, do the following:

| |

⚠ You might have to make the start.sh script executable before being allowed to execute it. If this happens, simply type the following command

| |

The Druid docker-compose file assumes that the Kafka cluster is running on the Docker network flink_sql_job_default. It should be the case if you cloned the flink_sql_job repo and started the infra using the commands listed before. Otherwise, simply adjust the references to the flink_sql_job_default Docker network in the docker-compose file.

Sanity Check

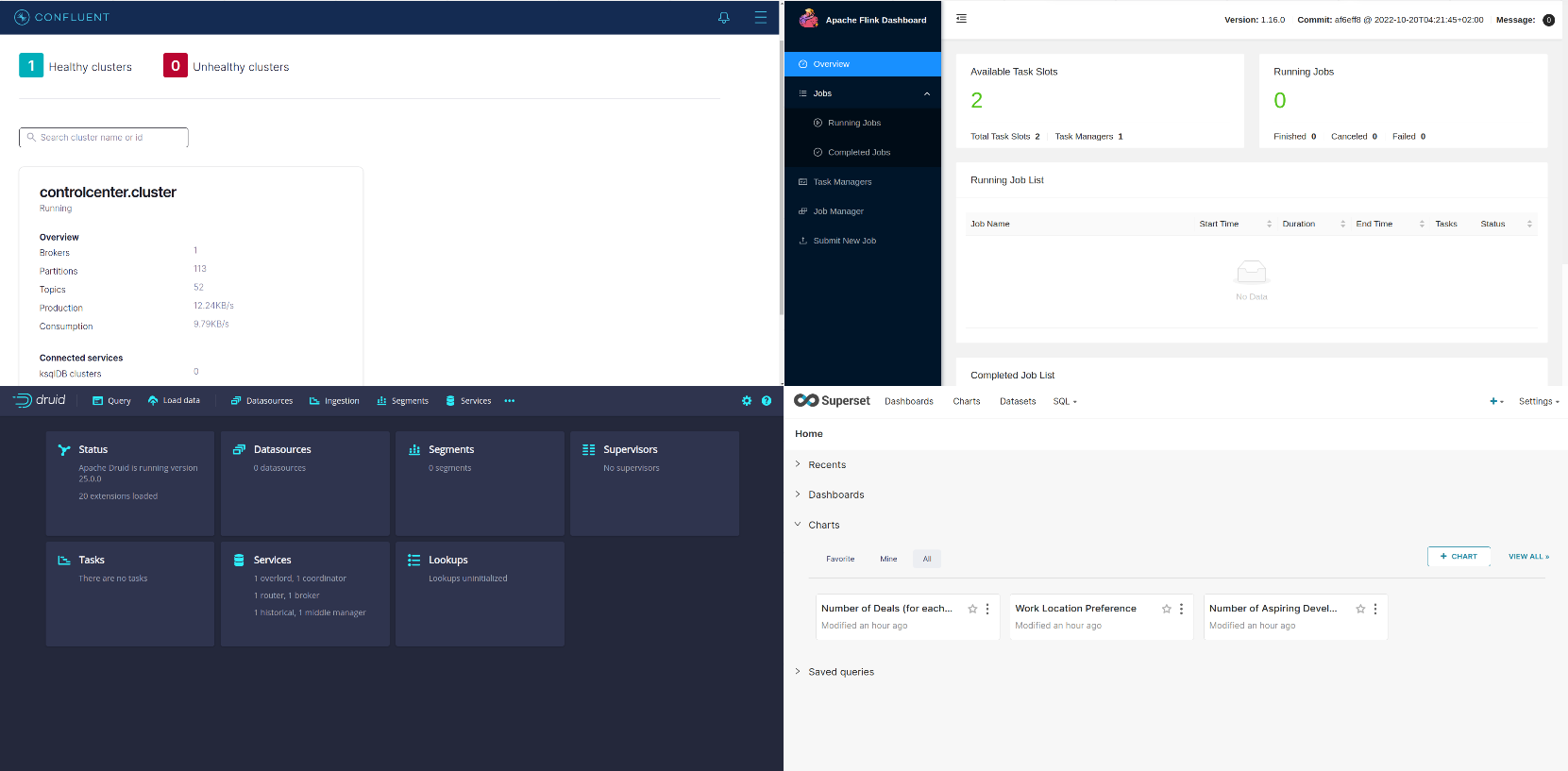

To check that all the services are up and running (you will see that a lot of Docker containers are running by now), visit the following URLs and check that all the UIs load properly:

- Kafka: http://localhost:9021

- Flink: http://localhost:18081

- Druid: http://localhost:8888 (username is druid_system and password is password2)

- Superset: http://localhost:8088 (username is admin and password is admin)

You should see something like

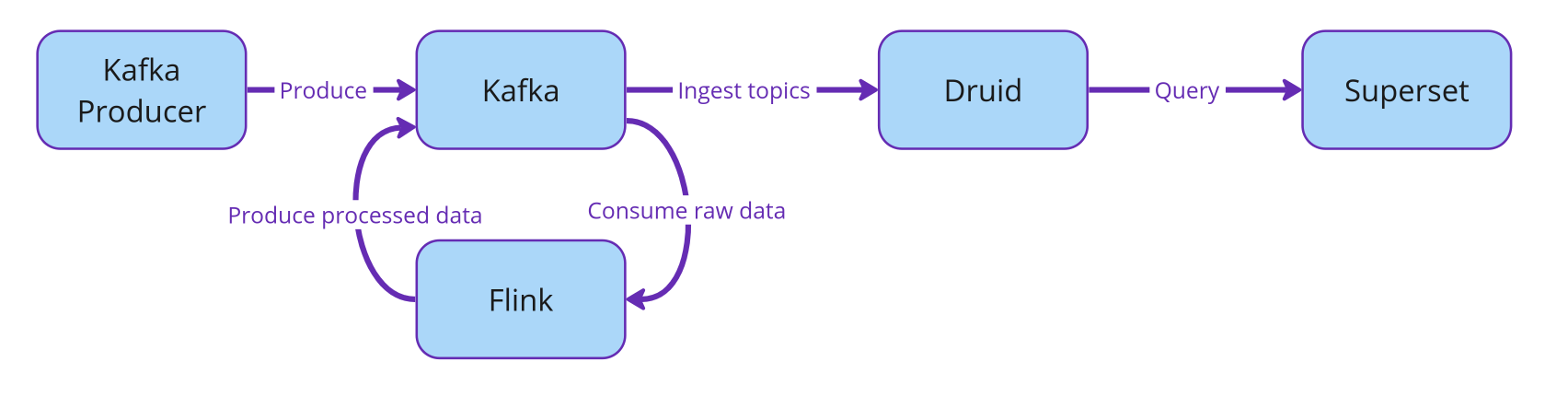
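If you prefer to script this check rather than open each UI by hand, here is a minimal sketch using only the Python standard library. It only tests that each of the URLs listed above answers over HTTP; it does not log in. The function names are ours, and treating any HTTP answer (including an authentication error) as "reachable" is an assumption made for this sketch.

```python
from urllib.error import HTTPError, URLError
from urllib.request import urlopen

# UI endpoints listed above; adjust them if you changed the port mappings.
SERVICES = {
    "Kafka": "http://localhost:9021",
    "Flink": "http://localhost:18081",
    "Druid": "http://localhost:8888",
    "Superset": "http://localhost:8088",
}


def is_reachable(url, timeout=5.0):
    """Return True if something answers HTTP at this URL."""
    try:
        with urlopen(url, timeout=timeout):
            return True
    except HTTPError:
        # The server answered with an error status (for example 401 because
        # we sent no credentials), so the service itself is running.
        return True
    except (URLError, OSError):
        # Connection refused, DNS failure, timeout, ...
        return False


def check_services(services, probe=is_reachable):
    """Map each service name to True/False using the given probe function."""
    return {name: probe(url) for name, url in services.items()}
```

Calling `check_services(SERVICES)` returns a dictionary from service name to a boolean. A `False` entry usually means the corresponding container is still starting or has exited.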

The relationship between all the services is illustrated with the flow diagram below.

In the following sections, we will see how to set up the stream ingestion by Apache Druid from our Kafka cluster, and then how to link Druid to Superset to create real-time dashboards!

🌀 Druid Stream Ingestion

The Kafka producer we started produces messages in the SALES topic. These are fake sales events produced every second with the following schema

| |

The first thing we will do is connect Druid to our Kafka cluster to allow the streaming ingestion of this topic and store it in our real-time database.

We will do this using Druid’s UI at http://localhost:8888. Below we show how to create the streaming ingestion spec step-by-step, but note that the ingestion spec can simply be posted as JSON using Druid’s REST API.
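For readers who want to try the REST route, here is a rough sketch of what posting a Kafka supervisor spec could look like from Python. It is not the exact spec used in this article. The broker address, the schema registry URL and the timestamp column are parameters you have to supply yourself, the granularity settings are illustrative choices, and the `avro_stream` input format assumes that Druid's Avro extension is loaded. The helper names are ours.

```python
import base64
import json
from urllib.request import Request, urlopen


def build_kafka_supervisor_spec(topic, bootstrap_servers, schema_registry_url,
                                timestamp_column, datasource=None):
    """Assemble a Kafka supervisor spec that decodes Avro via a schema registry."""
    return {
        "type": "kafka",
        "spec": {
            "dataSchema": {
                # Assumption: the datasource is named after the topic by default.
                "dataSource": datasource or topic,
                "timestampSpec": {"column": timestamp_column, "format": "auto"},
                # An empty list leaves dimension discovery to Druid.
                "dimensionsSpec": {"dimensions": []},
                # Illustrative values, not taken from the article.
                "granularitySpec": {
                    "type": "uniform",
                    "segmentGranularity": "HOUR",
                    "queryGranularity": "NONE",
                    "rollup": False,
                },
            },
            "ioConfig": {
                "topic": topic,
                "consumerProperties": {"bootstrap.servers": bootstrap_servers},
                "inputFormat": {
                    "type": "avro_stream",
                    "avroBytesDecoder": {
                        "type": "schema_registry",
                        "url": schema_registry_url,
                    },
                },
                "useEarliestOffset": True,
            },
            "tuningConfig": {"type": "kafka"},
        },
    }


def submit_supervisor_spec(spec, druid_url="http://localhost:8888",
                           username="druid_system", password="password2"):
    """POST the spec to Druid's supervisor endpoint using basic authentication."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request = Request(
        f"{druid_url}/druid/indexer/v1/supervisor",
        data=json.dumps(spec).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )
    with urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Building the spec and submitting it are kept separate on purpose, so you can print and inspect the JSON (or paste it into the Edit spec panel of the UI) before sending anything to the cluster.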

Design the Stream Ingestion Spec

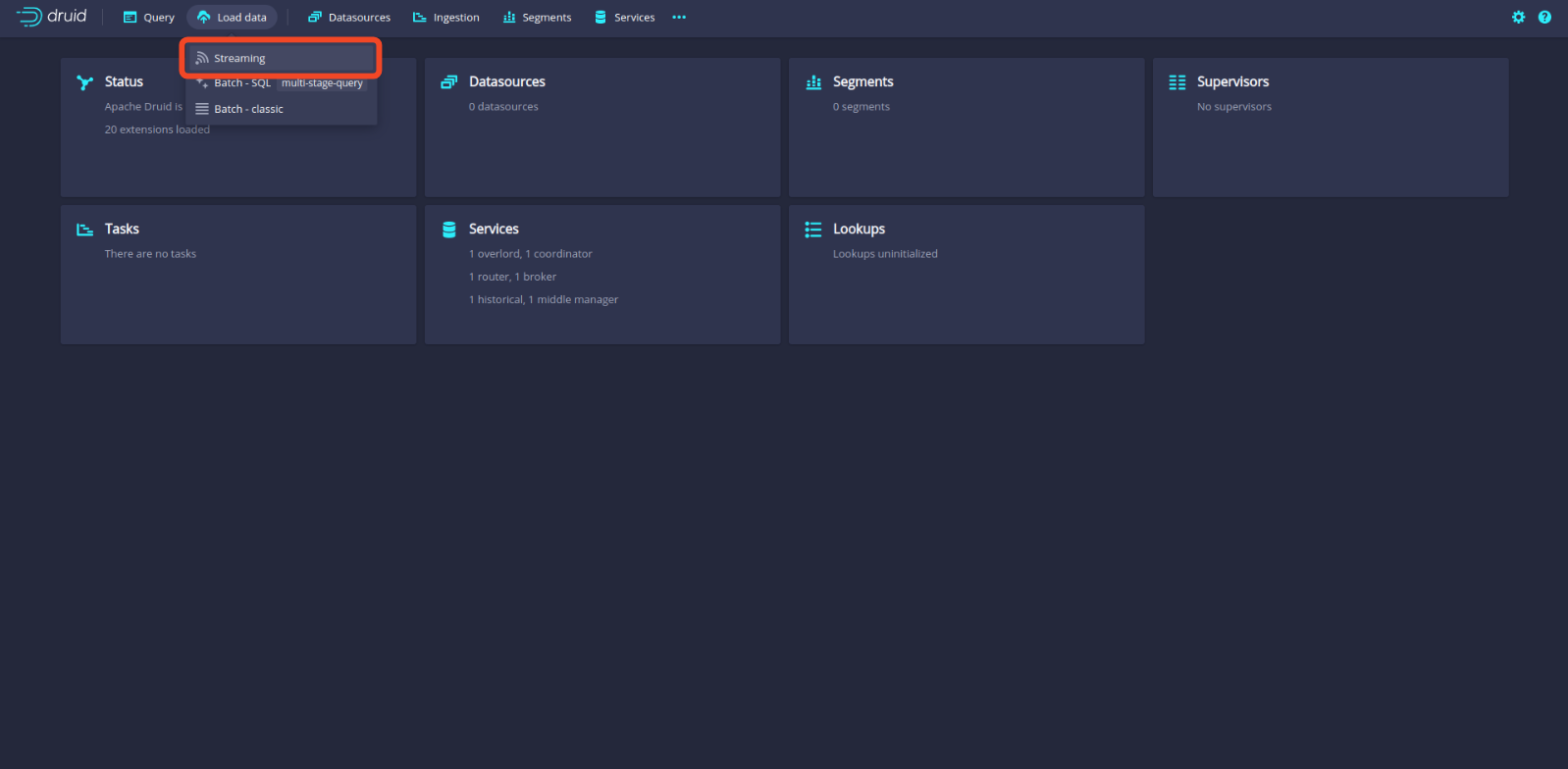

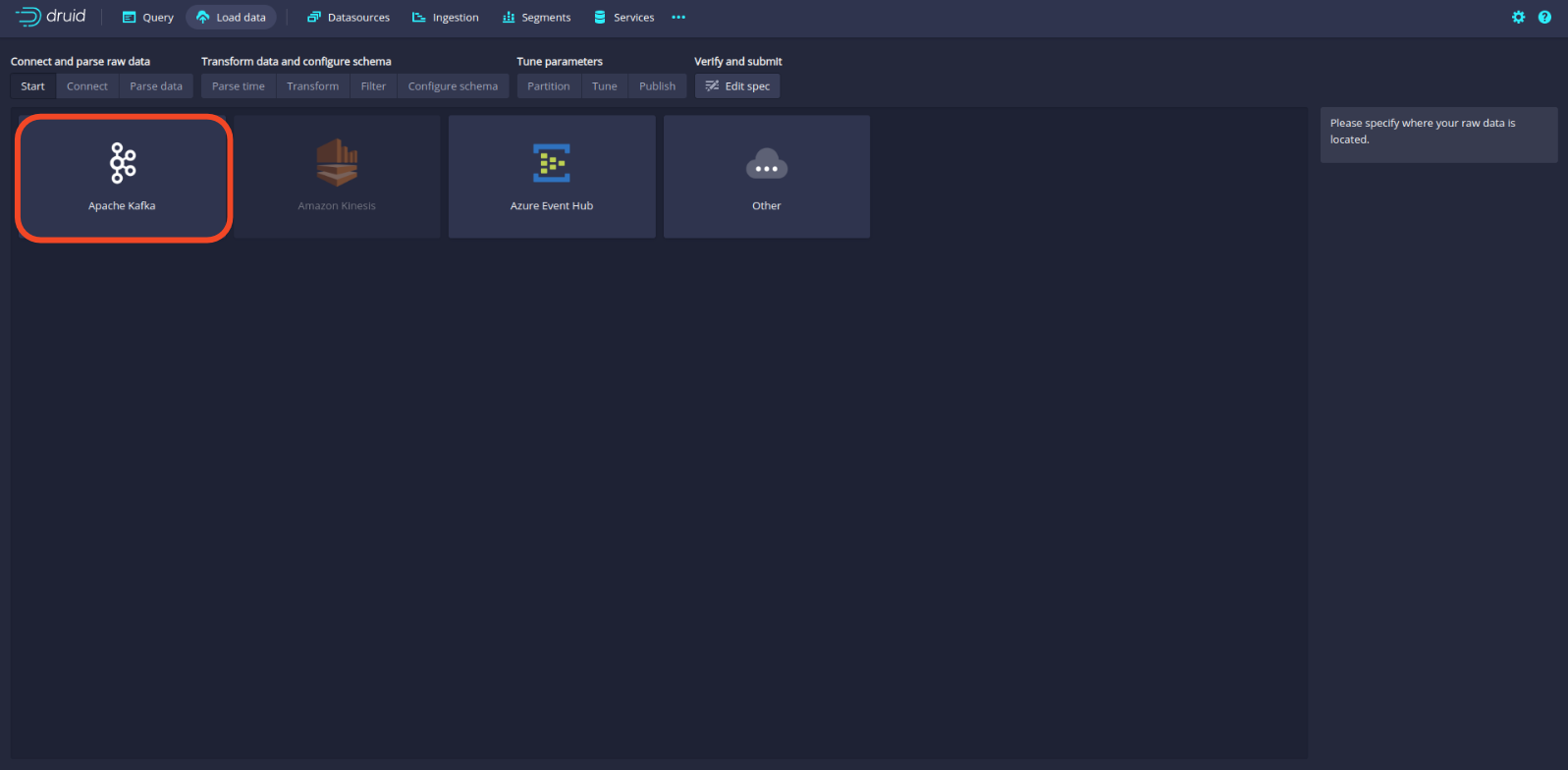

The first step is to click the Load data button and select the Streaming option.

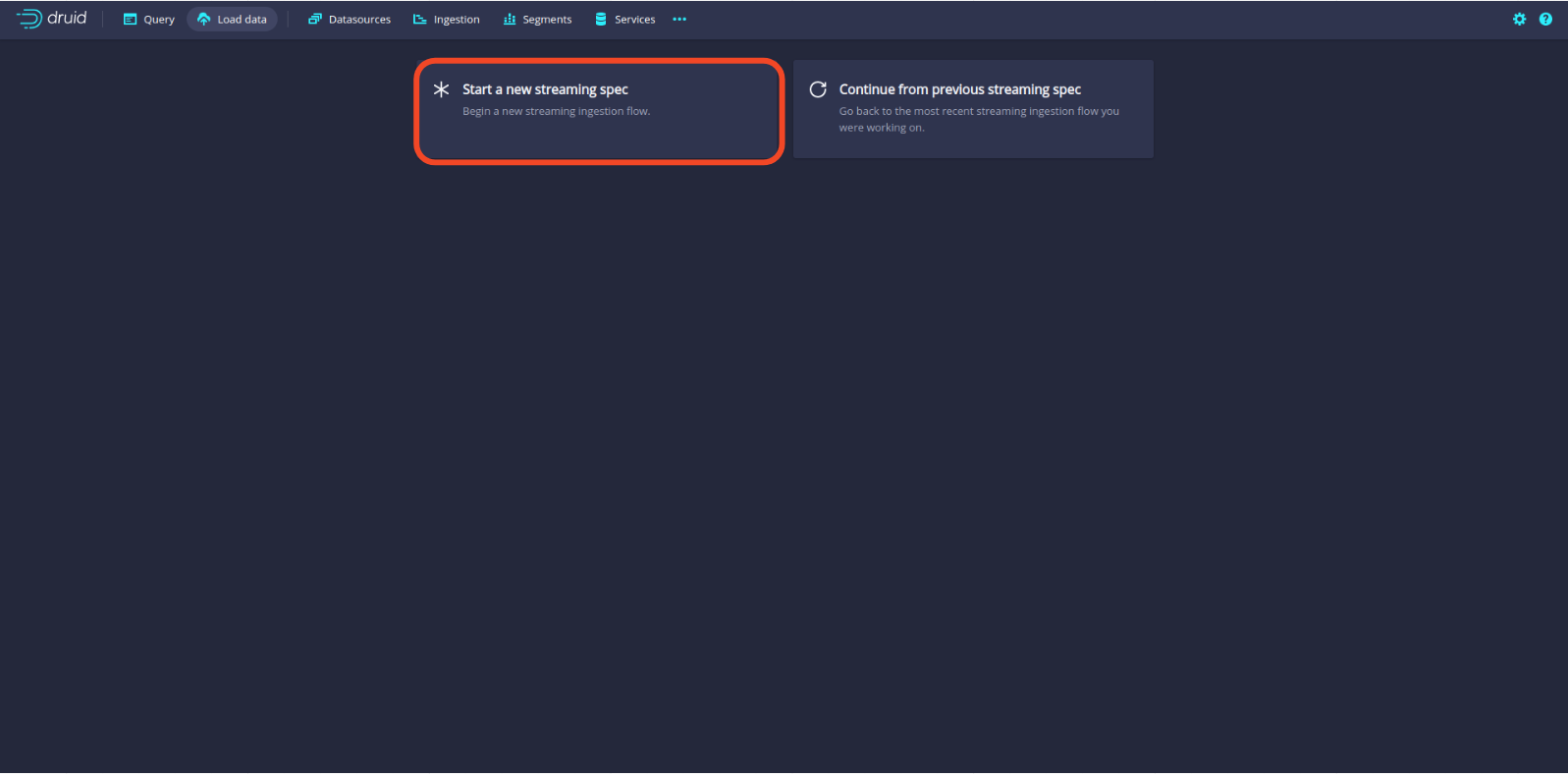

Then select Start a new streaming spec to start writing our custom streaming spec.

Once this is done, select Apache Kafka as the source.

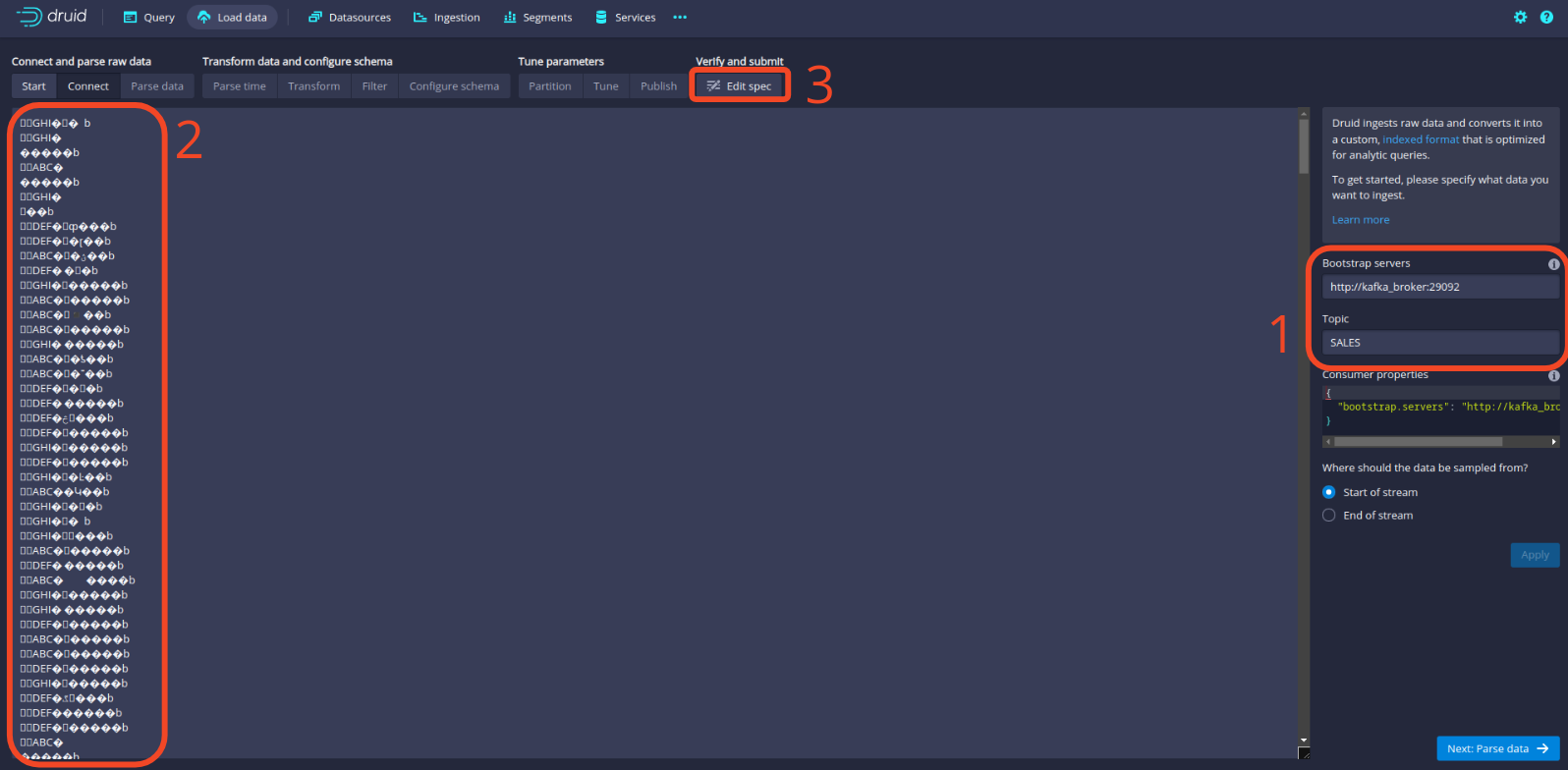

Once this is done, write the address of the Kafka broker and the topic name and click to apply the configuration. You should then see on the left sample data points from our SALES topic. Note that the data is not deserialized as this is the raw data coming from Kafka. We will need to include the Avro deserializer. To do this, click the Edit spec button.

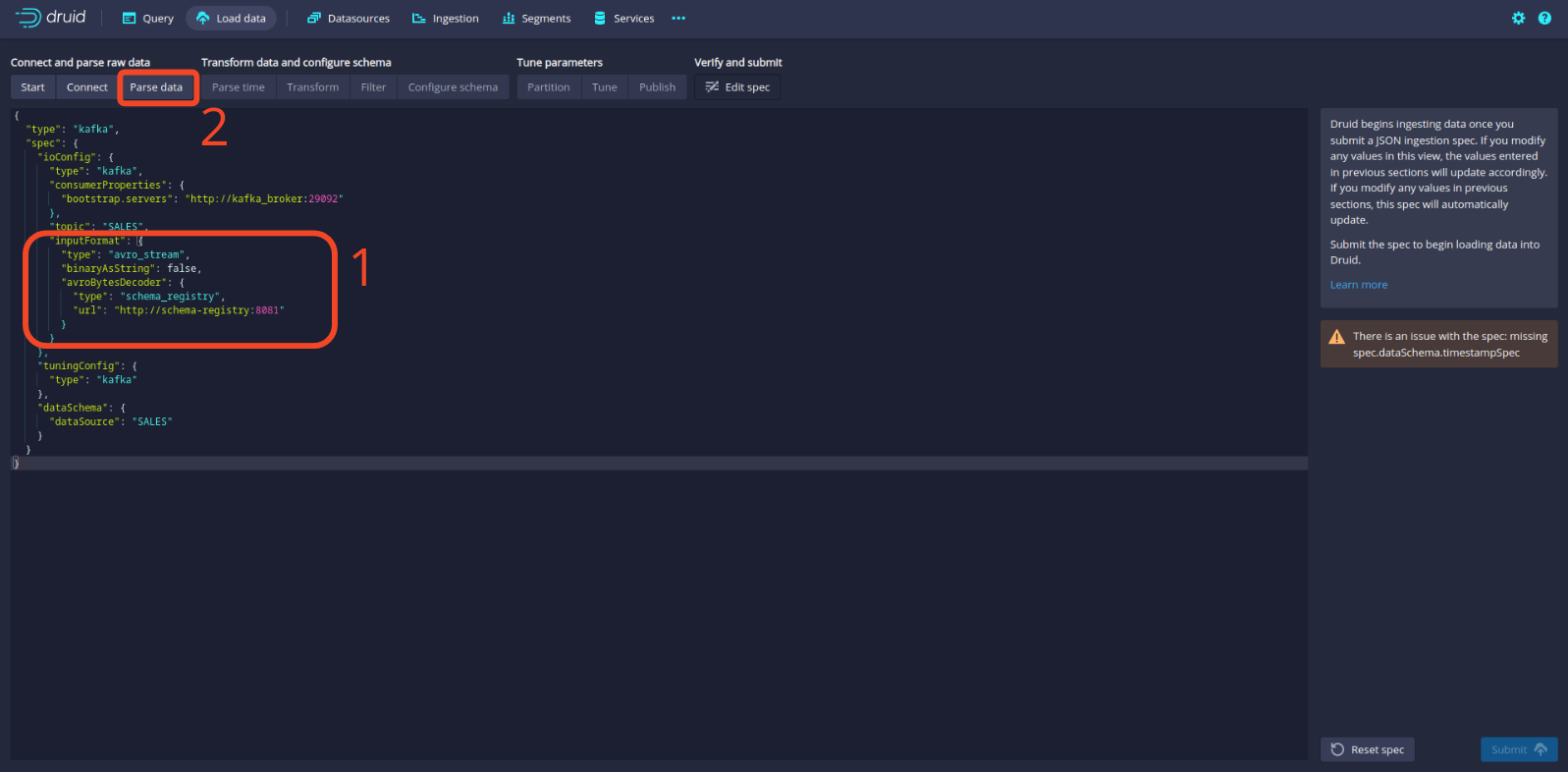

In the spec config, add the following (thank you Hellmar):

| |

and then click the Parse data button to go back to editing the spec.

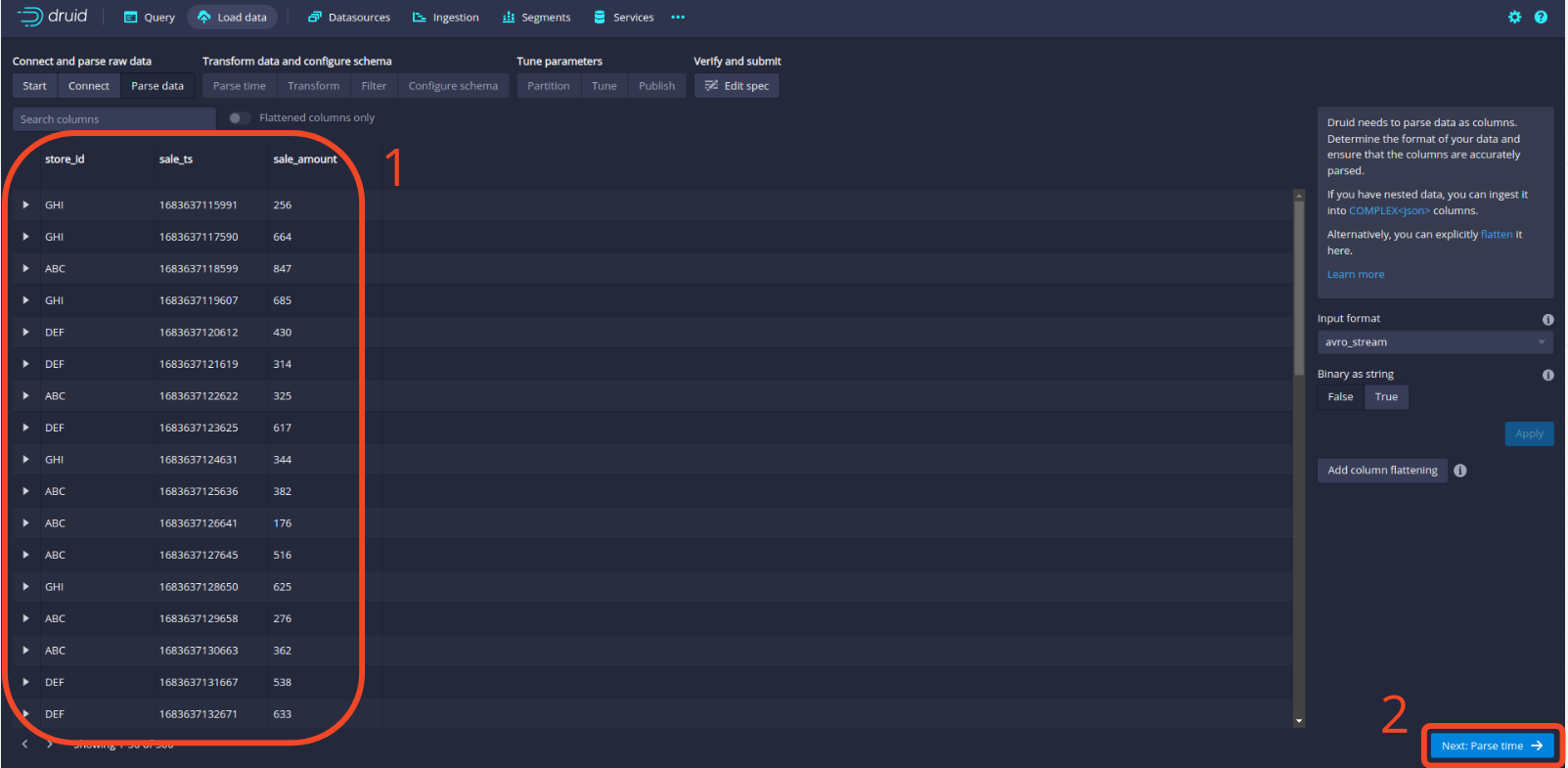

Now you should see our data being properly deserialized! Click the Parse time button to continue.

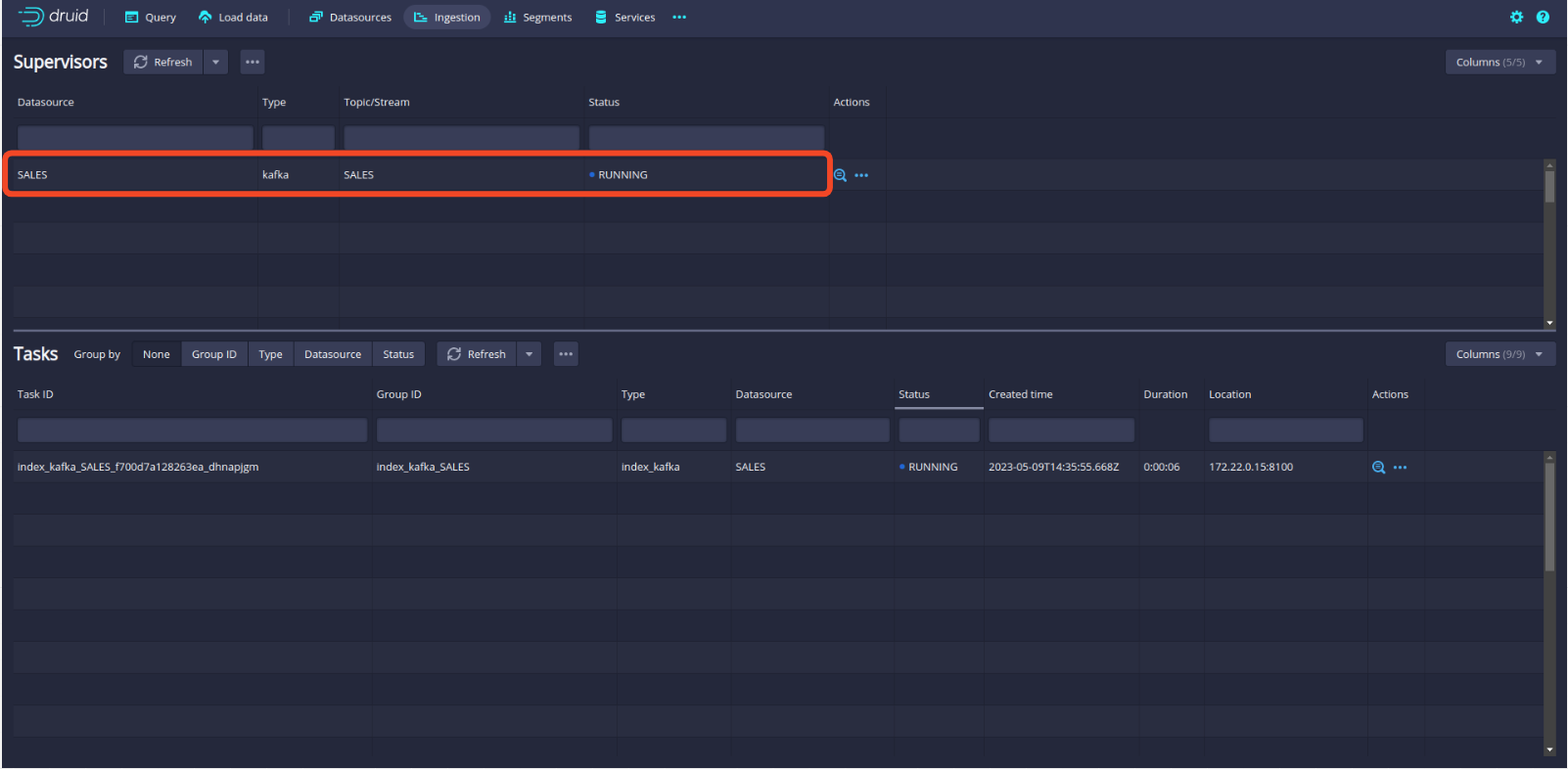

From here on, there is not much more to edit: simply click through the panels until the streaming spec is submitted. Druid’s UI will then automatically redirect to the Ingestion tab where you should see the following, indicating that Druid is successfully consuming from Kafka.

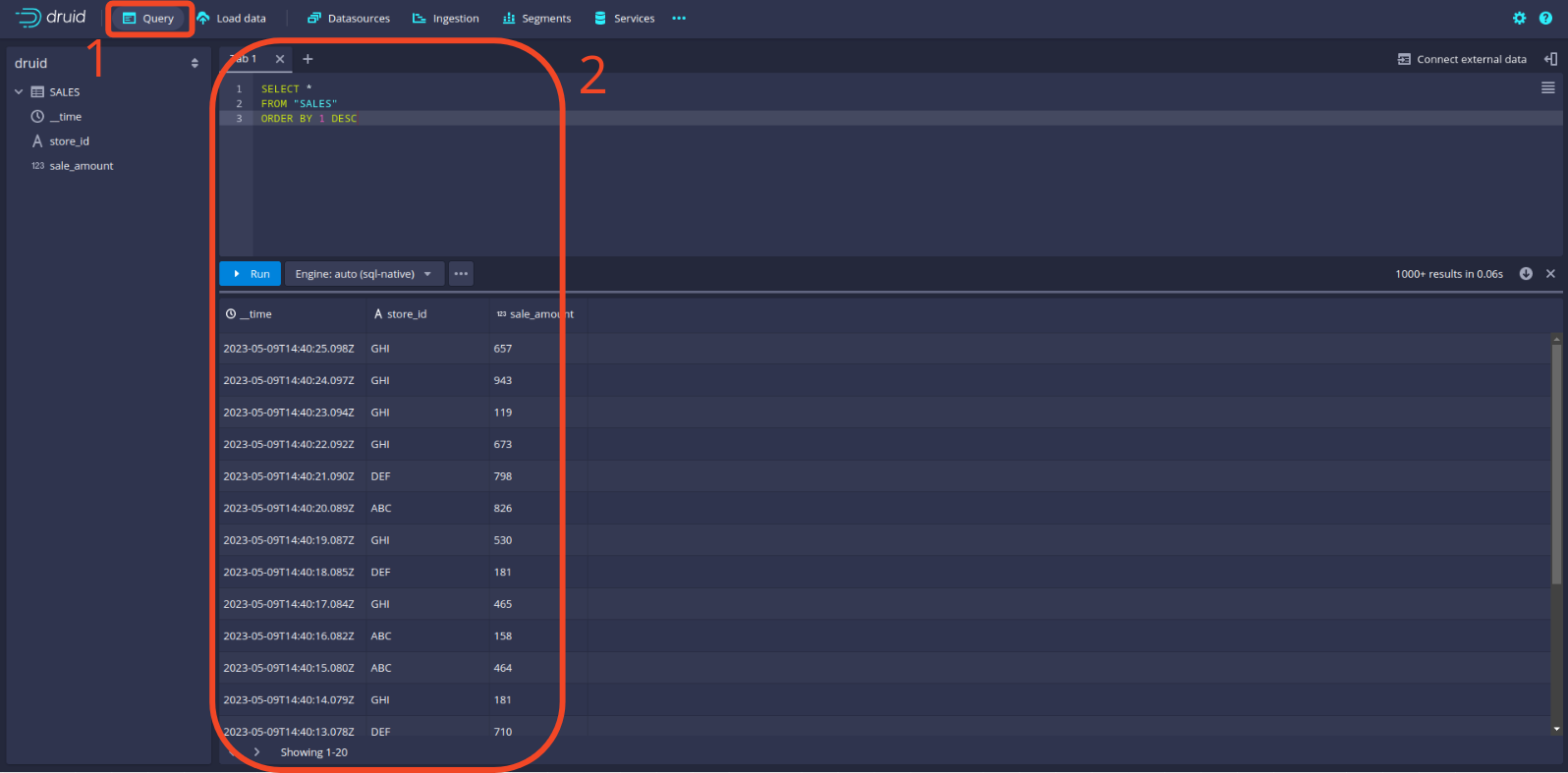

Finally, to check that data is properly loaded in Druid, click the Query tab and try a mock query. See example below.
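The same kind of check can be run outside the UI through Druid's SQL endpoint. The sketch below is only an illustration: it assumes the datasource was left with the name SALES during ingestion, and it only uses the `__time` column, which every Druid datasource has. The helper names are ours.

```python
import base64
import json
from urllib.request import Request, urlopen

# Assumption: the datasource is called SALES, like the Kafka topic.
COUNT_QUERY = 'SELECT COUNT(*) AS row_count, MAX(__time) AS latest_event FROM "SALES"'


def build_sql_request(query, druid_url="http://localhost:8888",
                      username="druid_system", password="password2"):
    """Build (without sending) a POST request for Druid's SQL API."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return Request(
        f"{druid_url}/druid/v2/sql",
        data=json.dumps({"query": query}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


def run_sql(query, **kwargs):
    """Send the query and return the decoded JSON rows."""
    with urlopen(build_sql_request(query, **kwargs), timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Running `run_sql(COUNT_QUERY)` a few seconds apart should show the row count growing and the latest event time moving forward while the producer is running.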

🎉 The stream ingestion is set up and now Druid will keep listening to the SALES Kafka topic.

🖼 Data Visualization in Superset

Now that the data is successfully being ingested into our streaming database, it is time to create our dashboard to report sales to management!

Navigate to the Superset UI at localhost:8088.

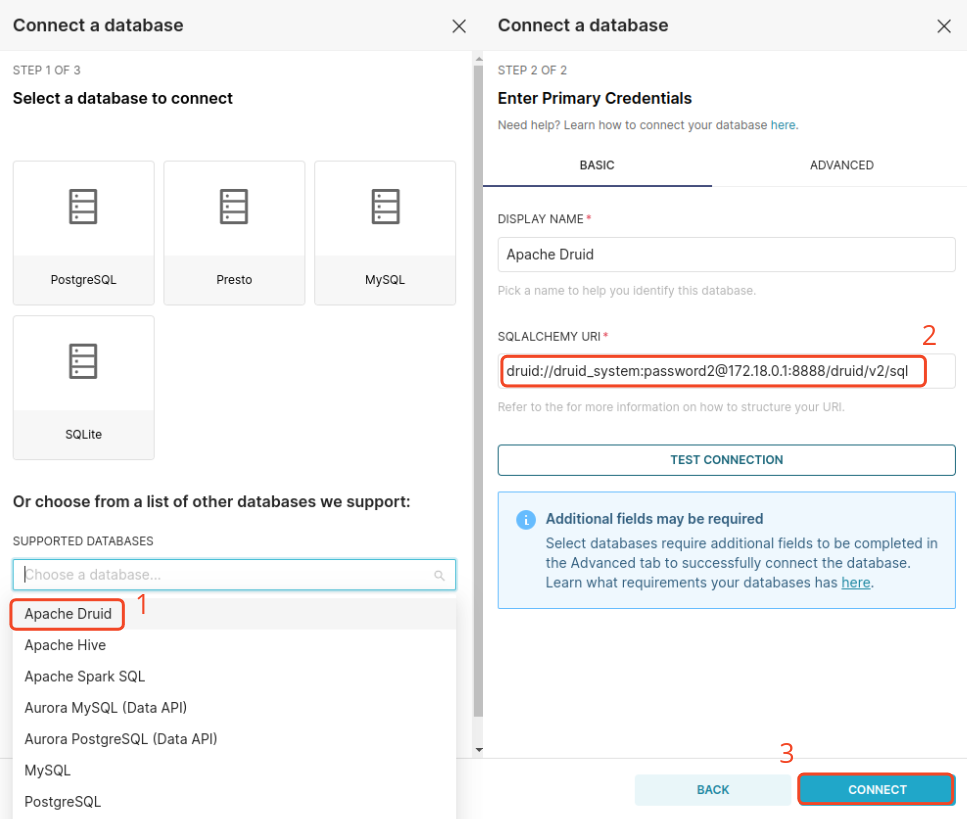

The first thing we need to do is connect our Druid database to Superset. To do this, click the + button in the upper-right corner and select Data and Connect database. Then, follow the steps shown below. Note that the connection string contains the following:

- database type: druid

- database credentials: druid_system and password2

- database sql endpoint: 172.18.0.1:8888/druid/v2/sql

Because Superset is not running on the same Docker network as the other services, it has to reach Druid through the host machine. The host address to use depends on your OS. See Superset documentation.

Basically, the following should apply:

- host.docker.internal for Mac or Ubuntu users

- 172.18.0.1 for Linux users
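To make the pieces of the connection string explicit, here is a small sketch that assembles it from the three elements listed above (database type, credentials and SQL endpoint). The function name is ours; the default port and credentials are the ones used in this article.

```python
from urllib.parse import quote


def druid_sqlalchemy_uri(host, port=8888,
                         username="druid_system", password="password2"):
    """Assemble the connection string to paste into Superset."""
    # Percent-encode the credentials in case they contain characters
    # such as '@' or ':' that would break the URI.
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"druid://{user}:{secret}@{host}:{port}/druid/v2/sql"


# Pick the host that matches your OS (see the list above).
print(druid_sqlalchemy_uri("172.18.0.1"))
print(druid_sqlalchemy_uri("host.docker.internal"))
```

Only the host part changes between operating systems; everything else in the string stays the same.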

Create Dashboard

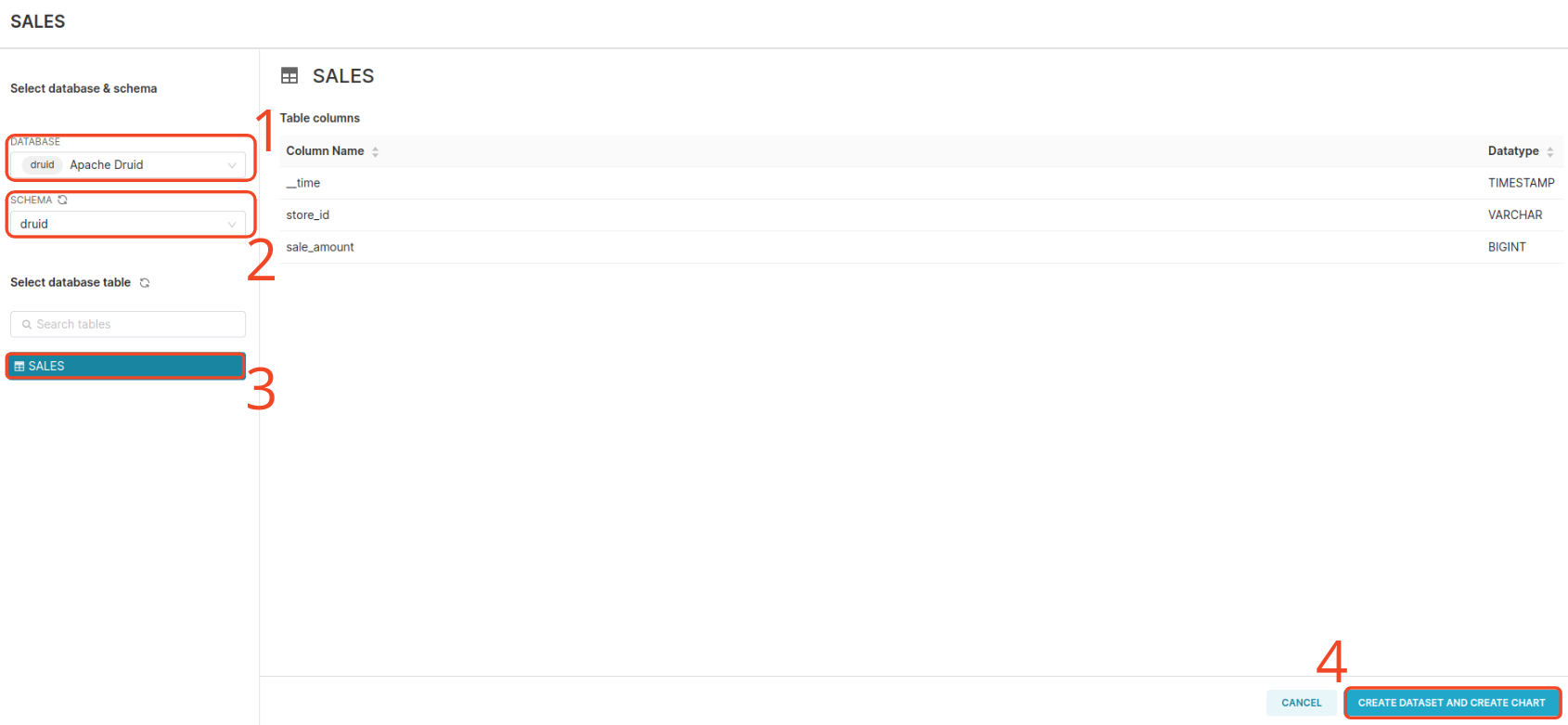

In Superset, a Dashboard is made of Charts; so the first thing is to create a Chart. To create a Chart we need to create a Dataset object. Click the Dataset button and follow the steps below to create a Dataset from the SALES topic.

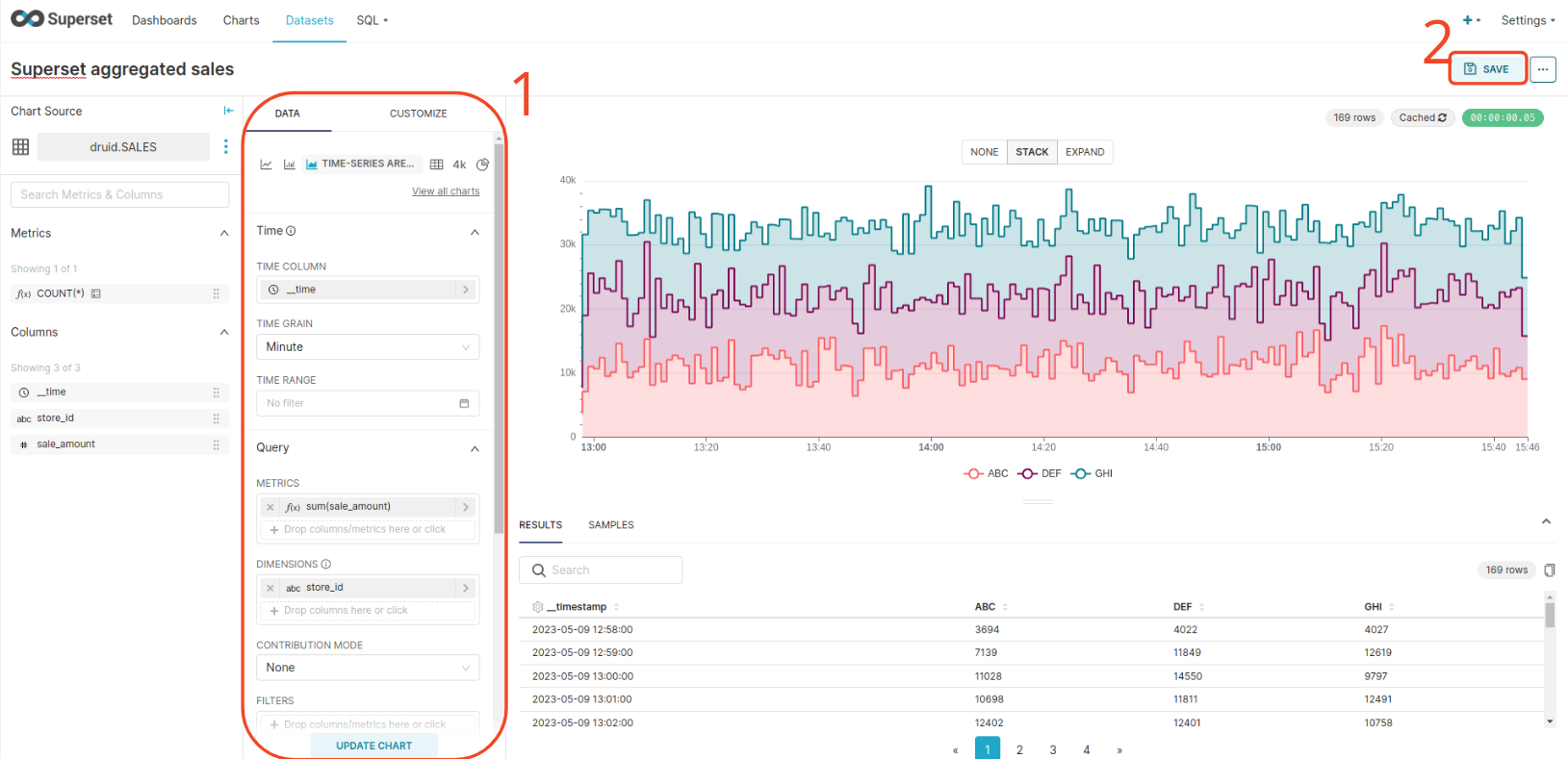

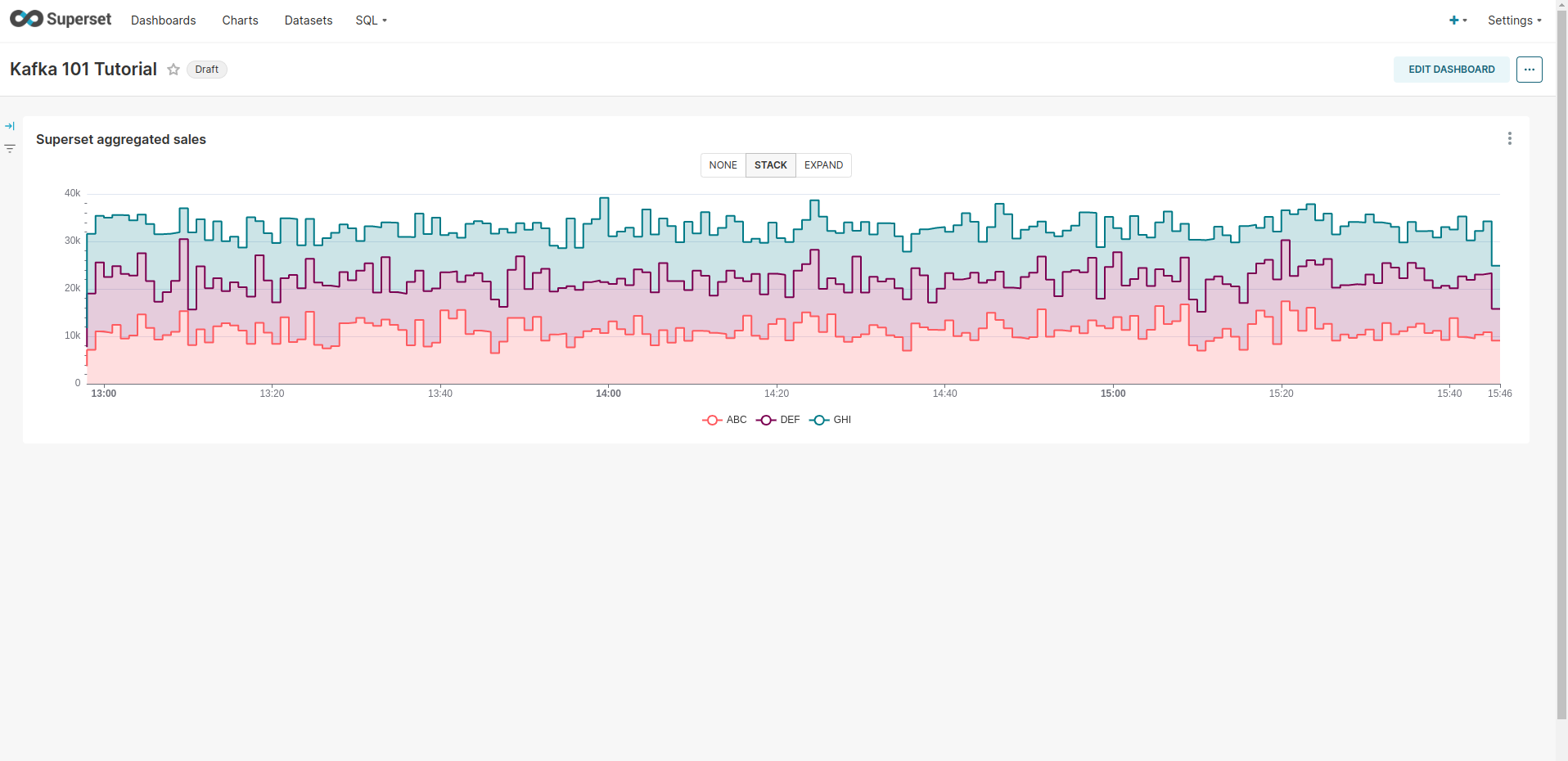

Create a time-series area chart from the SALES dataset and add a couple of configurations to the chart, as shown below. Then save the Chart and add it to a new Dashboard.

🐿 Throw in some Apache Flink

You will note that the Superset Chart that we created does the same 1-minute aggregation that our Flink job also does. Let us now consume the aggregated data directly from Apache Flink, and see if both aggregations report the same figures.

The steps to follow:

- Start the Flink job

- Write the streaming ingestion spec in Druid for the new topic

- Create a new chart in Superset and add it to the dashboard

Start the Flink job

To start the aggregating Flink job that computes aggregated sales per store per window of 1 minute, type the following commands:

| |

You will see in Kafka that a new topic SALES_AGGREGATE is created by the Flink job.

Now repeat the operations related to Druid and Superset to ingest the data in Druid and create the Chart in Superset.

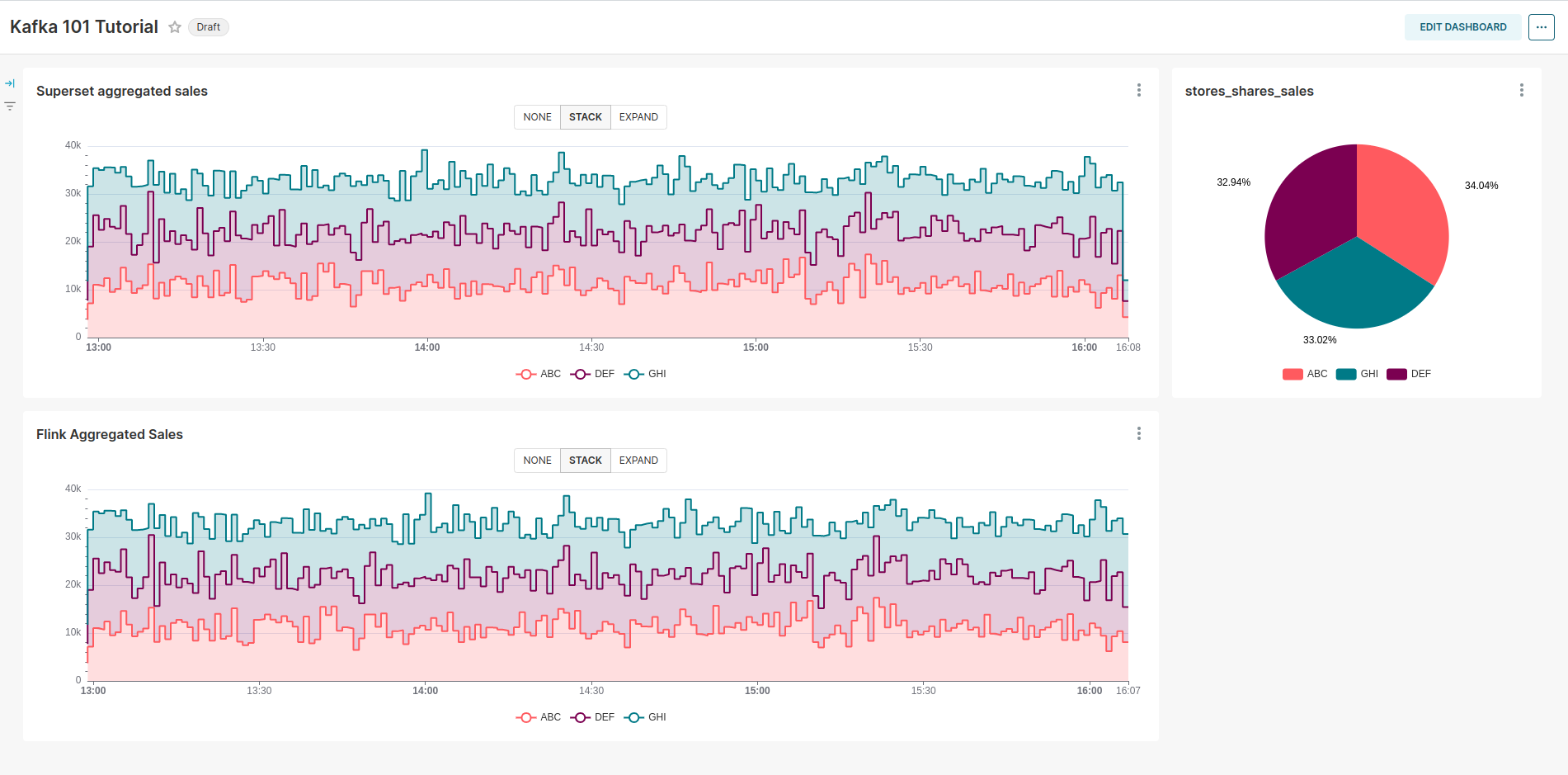

See the dashboard below

As expected, the two charts are identical, as the same aggregation is performed; but in one case the aggregation is performed in Superset, and in the other case it is performed via Apache Flink, and Superset simply reads the data from the database without having to perform any work. In addition, we created a pie chart that shows the share of each store in the total amount of sales. As expected, this is close to a uniform distribution of the sales, as each store generates sales from the same uniform distribution.
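To see why both charts must agree, it helps to spell out what "aggregated sales per store per window of 1 minute" means. The sketch below is plain Python, not the Flink job itself, and the event layout (epoch seconds, store id, amount) is a hypothetical one chosen for illustration rather than the actual schema of the SALES topic.

```python
from collections import defaultdict


def aggregate_per_minute(events):
    """Sum sale amounts per (window start, store) over 1-minute tumbling windows.

    `events` is an iterable of (epoch_seconds, store_id, amount) tuples;
    this layout is hypothetical and only serves the example.
    """
    totals = defaultdict(float)
    for timestamp, store_id, amount in events:
        # Align the timestamp to the start of its 60-second window.
        window_start = int(timestamp) - int(timestamp) % 60
        totals[(window_start, store_id)] += amount
    return dict(totals)
```

Whether this grouping is computed at query time for the Superset chart or ahead of time by Flink, each event lands in exactly one window, so the per-window totals are the same.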

☠ Tear the Infrastructure Down

When you are done playing with Superset, follow these steps to stop the whole infrastructure. We will first bring Druid and Superset down, and then shut down Flink, Kafka and the Avro records producer. The commands are shown below.

| |

Again, make sure that the stop.sh script in the real_time_dashboarding folder is executable.

📌 Conclusion

In this blog post, we covered the full data pipeline from end to end. It starts with raw data creation by the Kafka producer, which writes high-quality records to Kafka using an Avro schema and the schema registry. Next comes the streaming analytics pipeline, with the Flink cluster performing time aggregation on the raw data and sinking the results back to Kafka. We also covered how to get started with Apache Druid as a real-time OLAP database that persists the records from Kafka and allows fast querying. Lastly, we saw how to connect our streaming database to Superset, which acts here as our BI tool, to produce insightful visualizations and dashboards.
